Analyzing Indexing and Crawling Issues with AI

Getting your website to rank in search results isn’t just about having great content—it’s also about making sure search engines can find and understand your pages. If crawling or indexing issues arise, search engines might struggle to access your content, meaning your pages could remain invisible to potential visitors. Understanding how to monitor and fix indexing and crawling problems is essential for maintaining your site’s visibility.

In this guide, we’ll break down the common roadblocks that can prevent your pages from showing up in search results, show you how to track these issues in Google Search Console, and explore how AI-powered tools—like ChatGPT—can help you analyze and resolve them efficiently.

The topics covered in this guide include:

- How to Monitor Indexing and Crawling with Google Search Console

- Common Indexing and Crawling Issues

- Using AI Chatbots to Analyze Google Search Console Data

- What is a Crawl Budget and Why is It Important?

How to Monitor Indexing and Crawling with Google Search Console

Google Search Console (GSC) is an essential tool for tracking how Google interacts with your website. It allows you to monitor your site’s indexing and crawling status, helping you identify and resolve issues before they impact your site’s visibility. To check your indexing and crawling status, focus on three key sections: Overview, Performance, and Pages.

Overview

To start, you need to get an overall understanding of your site's health in Google Search Console. The Overview page is the first place to check, as it provides a summary of critical issues affecting your site. This page offers insights into whether your site is being indexed correctly and highlights any major issues, such as crawl errors or security problems.

Performance

The Performance report shows you how well your site is performing in search results. It provides data on:

- Clicks: How many times users clicked on your site’s link in search results.

- Impressions: How often your site appeared in search results.

- Click-Through Rate (CTR): The percentage of clicks per impression, which can indicate the relevance of your pages.

- Average Position: The average ranking of your site’s pages in search results.

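While this report doesn’t directly indicate indexing or crawling issues, it can reveal patterns that suggest problems. For example, a sudden drop in impressions for a page that previously performed well can mean the page has fallen out of the index.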
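As a rough illustration of the metrics above, the sketch below computes CTR from clicks and impressions and flags pages whose impressions fell sharply between two periods. The page paths, the numbers, and the 50% threshold are all hypothetical; real values would come from your own Performance export.

```python
def ctr_percent(clicks: int, impressions: int) -> float:
    """Click-through rate as a percentage; 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return 100.0 * clicks / impressions


def impression_drops(previous: dict[str, int], current: dict[str, int],
                     threshold: float = 0.5) -> list[str]:
    """Return pages whose impressions fell by at least `threshold` (a fraction)."""
    flagged = []
    for page, before in previous.items():
        after = current.get(page, 0)  # a page missing from `current` counts as 0
        if before > 0 and (before - after) / before >= threshold:
            flagged.append(page)
    return sorted(flagged)


# Hypothetical impressions per page for two consecutive periods.
previous = {"/pricing": 1200, "/blog/guide": 800, "/about": 50}
current = {"/pricing": 1150, "/blog/guide": 90, "/about": 48}

print(ctr_percent(30, 1200))                # 2.5
print(impression_drops(previous, current))  # ['/blog/guide']
```

A flagged page is only a hint. The Pages report is where you confirm whether the page is actually indexed.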

Pages

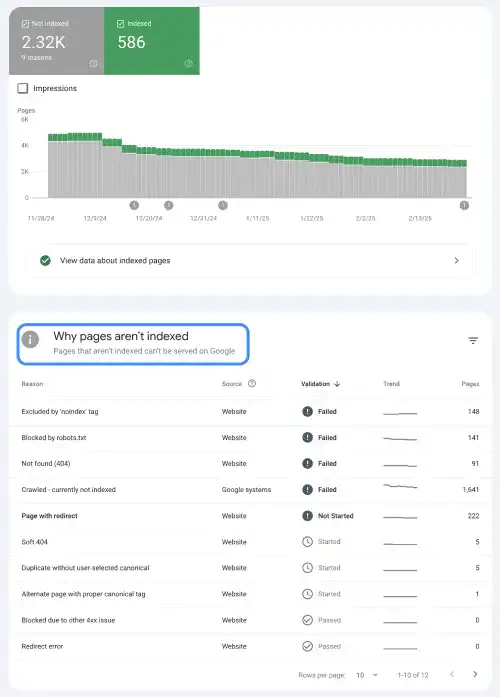

The Pages section in Google Search Console is crucial for identifying indexing and crawling issues. It provides a detailed view of each page's status, including:

- Indexed Pages: Pages that Google has successfully crawled and indexed.

- Not Indexed Pages: Pages that weren't indexed for various reasons, such as being blocked by the noindex tag or robots.txt, or returning errors like 404 or redirect issues (details are explained in the next section).

Here, you can check the specific reasons why some pages aren’t indexed, such as a noindex directive, broken links, or other crawl issues. We’ll explore the “Why pages aren’t indexed” section in more detail later, where you’ll learn how to address specific issues.

Common Indexing and Crawling Issues

Interpreting Google Search Console data can be challenging, especially if you're new to SEO. Here are quick explanations of some common issues you may encounter:

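- Blocked by robots.txt: If your site’s robots.txt file blocks search engines from accessing certain pages, those pages will not be crawled. Google can still index a blocked URL if other pages link to it, but without any of its content, so important pages may be missing from search results or appear without a useful description.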

- Excluded by ‘noindex’ Tag: The noindex meta tag tells search engines not to index a page. While useful for non-relevant pages (e.g., login pages), it can be problematic if you accidentally apply it to pages you want to be indexed.

- Not Found (404): Pages that return a 404 error indicate that the page couldn’t be found. Broken links or deleted pages often cause this issue, leading to missed indexing opportunities.

- Crawled - Currently Not Indexed: Sometimes, Googlebot may crawl a page but not index it. This could happen due to factors like low-quality content, duplication, or even temporary issues on Google’s side.

- Page with Redirect: Redirects (such as 301 or 302) are commonly used to guide users and search engines to the correct page. Google indexes the destination rather than the redirecting URL, so this status is expected for intentional redirects. However, improperly configured redirects can create crawl issues or confuse Googlebot, preventing proper indexing.

- Soft 404: A soft 404 error occurs when a page that doesn’t exist shows a "not found" message but responds with a success status code (such as 200) instead of the correct 404 status code. This can confuse search engines, making them think the page still exists.

- Duplicate Without User-Selected Canonical: Duplicate content can confuse search engines about which page to index. If you have identical or similar pages, it's important to use the rel=canonical tag to tell search engines which version is the preferred one.

- Blocked Due to Other 4xx Issues: Errors like 403 (Forbidden) or 401 (Unauthorized) block search engine crawlers from accessing certain pages, preventing them from being indexed.

- Alternate Page with Proper Canonical Tag: In some cases, multiple versions of the same content exist, such as a printer-friendly page. By setting the correct canonical tag, you inform search engines which version of the page should be indexed.

- Redirect Error: Redirects can sometimes fail, either by pointing to incorrect URLs or creating infinite redirect loops. These issues can prevent search engines from properly crawling and indexing your pages.
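Several of the issues listed above can be spot-checked with a few lines of code. The following is a minimal sketch using only Python's standard library. It detects a noindex directive in a page's HTML, traces a redirect map for loops, and applies a simple soft-404 heuristic. The function names, the redirect map, and the "not found" phrases are hypothetical choices for illustration, and the sketch works on strings you supply rather than fetching live pages.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for part in attr.get("content", "").split(","):
                self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """True if the HTML carries a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def trace_redirects(start: str, redirects: dict[str, str], max_hops: int = 10):
    """Follow a URL -> target map and return (chain, status)."""
    chain, seen, url = [start], {start}, start
    while url in redirects:
        url = redirects[url]
        chain.append(url)
        if url in seen:
            return chain, "loop"
        seen.add(url)
        if len(chain) - 1 >= max_hops:
            return chain, "too_many_hops"
    return chain, "ok"


def looks_like_soft_404(status_code: int, body: str) -> bool:
    """Heuristic: a success status combined with 'not found' wording."""
    phrases = ("page not found", "no longer available")  # assumed phrases
    return status_code == 200 and any(p in body.lower() for p in phrases)


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
redirect_map = {"/old": "/new", "/a": "/b", "/b": "/a"}  # hypothetical URLs

print(has_noindex(page))                                    # True
print(trace_redirects("/old", redirect_map))                # (['/old', '/new'], 'ok')
print(trace_redirects("/a", redirect_map))                  # (['/a', '/b', '/a'], 'loop')
print(looks_like_soft_404(200, "<h1>Page not found</h1>"))  # True
```

These checks only approximate what Google reports. For instance, a noindex directive can also be sent in an HTTP response header, which this sketch does not inspect.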

Even with these explanations, you may still be unsure about what they mean or how to resolve them. One of the best practices is to leverage AI chatbots to clarify the issues and provide actionable solutions. In the next section, we’ll explore how to use AI chatbots to analyze indexing and crawling issues and get recommendations for resolution.

Using AI Chatbots to Analyze Google Search Console Data

AI tools like ChatGPT can be invaluable in helping you analyze and interpret data from Google Search Console. By leveraging AI, you can simplify the process and gain actionable insights. ChatGPT can help you identify patterns and recommend specific actions to resolve issues in your site's indexing and crawling processes.

Step 1: Submitting the Overall Report for Indexing Issues

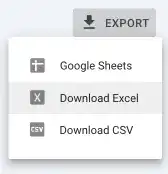

Start by exporting the data from the Pages section in Google Search Console. You can download this data as a CSV or Excel file.

This file will contain an overview of your site’s indexed and excluded pages, along with any errors encountered during crawling.

Once you have the file, share it with ChatGPT for analysis. For example, you could upload the report and ask:

Sample AI prompt:

Can you help me identify which pages have indexing issues, prioritizing them based on their severity?

ChatGPT will analyze the data and point out any common patterns, such as pages that have been excluded due to noindex tags or errors caused by broken links. It can also suggest fixes, like removing the noindex tag or fixing 404 errors.

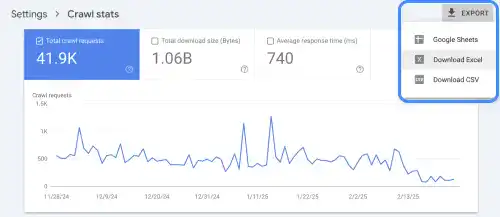
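Before handing the export to a chatbot, it can help to summarize it yourself so you can sanity-check the AI's answer. The sketch below ranks the not-indexed reasons by page count. The column names `Reason` and `Pages` and the sample rows are assumptions for illustration; check the header row of your own export and adjust accordingly.

```python
import csv
import io

# Hypothetical sample standing in for the exported file's contents.
SAMPLE_EXPORT = """Reason,Pages
Not found (404),42
Excluded by 'noindex' tag,7
Crawled - currently not indexed,118
Page with redirect,15
"""


def rank_reasons(csv_text: str) -> list[tuple[str, int, float]]:
    """Return (reason, page count, share in %) sorted by page count, largest first."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    counts = [(row["Reason"], int(row["Pages"])) for row in rows]
    total = sum(pages for _, pages in counts)
    ranked = sorted(counts, key=lambda item: item[1], reverse=True)
    return [
        (reason, pages, round(100 * pages / total, 1) if total else 0.0)
        for reason, pages in ranked
    ]


for reason, pages, share in rank_reasons(SAMPLE_EXPORT):
    print(f"{pages:>5}  {share:>5}%  {reason}")
```

Page count is only one way to prioritize. A reason that affects a handful of high-value pages may deserve attention before one that affects many low-value ones.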

Step 2: Submitting the Crawl Stats Report for Crawling Issues

The Crawl Stats report in Google Search Console provides detailed insights into how often and how effectively Googlebot is crawling your website. This report is essential for understanding the health of your site in terms of crawling activity and detecting potential issues that might affect your site’s indexing. The Crawl Stats report offers more granular data, allowing you to identify patterns and problems in crawling with a higher level of detail than the general crawling overview.

How to Find Crawl Stats in Google Search Console

To access the Crawl Stats report, follow these steps:

- Access Google Search Console: First, log in to your Google Search Console account.

- Navigate to the Settings: On the left-hand menu, scroll down and click on Settings.

- Find Crawl Stats: Under the "Crawling" section, click Open Report next to Crawl stats. This report will show you how many pages Googlebot has crawled, how long it took to crawl them, and other important metrics.

Key Content of Crawl Stats

The Crawl Stats report offers several important metrics that provide a deeper understanding of your site’s crawling activity:

- Total Crawl Requests: This graph shows how many crawl requests Googlebot made to your site over the last 90 days.

- Crawl Duration: Shown in the report as average response time, this is how long your server takes, on average, to respond to a crawl request.

- Response Codes: Information about the response codes (e.g., 200, 404, 5xx) returned during crawling.

- Crawl Errors: Information about any crawl errors Googlebot has encountered while trying to access your pages.

Crawl Stats is a vital report because it helps you monitor the overall crawling health of your site and identify any anomalies, like sudden drops in crawling frequency or increased response times, that could signal problems.

Export the Crawl Stats Report

In Google Search Console, download the Crawl Stats report as a CSV or Excel file. This file will contain key metrics such as the number of pages crawled, the response codes, and crawl duration.

Upload the Data to an AI Chatbot and Ask Questions

Once you have this file, upload it to ChatGPT and ask:

Sample AI prompt:

Can you help me identify potential crawling issues, prioritizing them based on their severity?

ChatGPT can help you understand the causes of issues and suggest steps for improvement. For instance, if you notice a spike in crawl duration, the AI might suggest investigating server performance or reducing page load times. If there are errors like 5xx server issues, ChatGPT will highlight that these need to be fixed on the server side.
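The same kind of anomaly check can be sketched locally. The example below flags days where response time is well above the median or crawl volume is well below it. The column names, the sample rows, and the two thresholds are hypothetical; the actual export layout may differ, so treat this as a starting point rather than a parser for the real file.

```python
import csv
import io
from statistics import median

# Hypothetical daily rows standing in for an exported Crawl Stats chart.
SAMPLE_CRAWL_STATS = """Date,Crawl requests,Average response time (ms)
2024-05-01,950,310
2024-05-02,1010,295
2024-05-03,980,305
2024-05-04,400,1240
2024-05-05,990,300
"""


def find_anomalies(csv_text: str, slow_factor: float = 2.0,
                   drop_factor: float = 0.5) -> list[tuple[str, list[str]]]:
    """Flag days that deviate strongly from the median of the period."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return []
    requests = [int(row["Crawl requests"]) for row in rows]
    times = [int(row["Average response time (ms)"]) for row in rows]
    typical_requests, typical_time = median(requests), median(times)

    anomalies = []
    for row, req, ms in zip(rows, requests, times):
        reasons = []
        if ms > slow_factor * typical_time:
            reasons.append("slow responses")
        if req < drop_factor * typical_requests:
            reasons.append("crawl drop")
        if reasons:
            anomalies.append((row["Date"], reasons))
    return anomalies


print(find_anomalies(SAMPLE_CRAWL_STATS))
# [('2024-05-04', ['slow responses', 'crawl drop'])]
```

The median is used instead of the mean so that a single bad day does not shift the baseline it is compared against.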

What is a Crawl Budget and Why is It Important?

Crawl budget refers to the number of pages a search engine's crawler, like Googlebot, will crawl on your website within a given timeframe. It varies depending on factors like the size of your website, its authority, and how frequently content is updated. Websites with high authority or frequent updates tend to have a larger crawl budget, ensuring that key pages are crawled and indexed. Conversely, websites with poor-quality or outdated content may receive a smaller crawl budget, which results in fewer pages being crawled and indexed.

Crawl budget is often one of the primary reasons for slow indexing, but it can be difficult to understand the full scope of what's going on. Identifying issues with crawl budgets, such as underutilization or inefficient allocation, can be complex without the right tools and data, making it harder to pinpoint why certain pages aren’t being indexed as quickly as expected.

Why is the Crawl Budget Important?

Understanding the importance of crawl budget can help you optimize your site for better indexing and visibility in search results. There are three primary reasons why crawl budget matters:

- Maximizing Indexing Efficiency: Crawl budget impacts how effectively a search engine indexes your website. If your crawl budget is limited or poorly managed, search engines may only crawl a portion of your site, causing important pages to be overlooked and preventing them from appearing in search results. Maximizing crawl budget ensures search engines focus on the most valuable pages, boosting your site’s visibility.

- Improving Crawl Efficiency: A well-managed crawl budget ensures that search engine bots don’t waste time on low-value or irrelevant pages. By using tools like robots.txt and noindex tags, you can guide crawlers to avoid these unnecessary pages and prioritize valuable content. This ensures the crawl budget is used efficiently on your most important pages.

- Preventing Server Overload: For large websites, improper crawl budget management can overload the server with too many crawling requests. This can lead to slow website performance, longer load times, and even server crashes. By controlling crawl frequency and depth, you can prevent excessive crawling and maintain optimal site performance for both search engines and users.
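Because a single wrong robots.txt rule can block valuable pages, it is worth testing rules before deploying them. The sketch below uses Python's standard `urllib.robotparser` to check which URLs a set of rules would block. The rules and the example.com URLs are hypothetical. Note also that Python's parser applies the first matching rule, while Google applies the most specific one, so results can differ for files that mix overlapping Allow and Disallow rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules that keep crawlers out of low-value sections.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart/
"""


def blocked_urls(robots_txt: str, urls: list[str],
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://example.com/",
    "https://example.com/products/widget",
    "https://example.com/search/results",
    "https://example.com/cart/checkout",
]

print(blocked_urls(ROBOTS_TXT, urls))
# ['https://example.com/search/results', 'https://example.com/cart/checkout']
```

If a page you want indexed shows up in the blocked list, the rule that matches it is the one to revisit.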

How to Optimize Crawl Budget

By managing crawl errors, blocking irrelevant pages, and prioritizing critical content, you can make the most of your crawl budget, leading to improved SEO performance. Here are some common practices to effectively manage crawl budget:

- Fixing Crawl Errors: Crawl errors like 404 or server errors can waste crawl budget, as search engines will repeatedly attempt to crawl these broken or inaccessible pages. By regularly checking for and fixing errors, you ensure that crawlers aren’t wasting time on pages that can’t be indexed. Keeping your site error-free maximizes crawl efficiency and ensures that valuable pages are crawled instead of encountering issues.

- Blocking Low-Value Pages: Using robots.txt or noindex tags, you can keep search engine bots away from pages that don’t contribute to your SEO efforts, such as duplicate content or low-priority pages. Note that only robots.txt stops a page from being crawled; a noindex tag keeps a page out of the index, but Google still has to crawl the page to see the tag. By blocking these pages, you free up crawl budget for more important content, ensuring search engines focus on what matters most for your site.

- Prioritizing Important Pages: Ensure that high-priority pages are easily accessible to crawlers. By organizing your site’s structure effectively and reducing the depth of important pages, you can help search engines crawl them more frequently. When pages are buried too deep in a site’s structure, search engines may skip crawling them due to the limited crawl budget.

- Monitoring Crawl Stats and Adjusting: Regularly monitor your site’s crawl stats in Google Search Console to track how often your pages are crawled and how efficiently Googlebot is accessing your content. If you notice fluctuations in crawl frequency or issues like slow response times, take action to improve server performance or optimize crawl depth. Adjusting these factors ensures that your crawl budget is being allocated in the most efficient way possible.
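To make the idea of page depth concrete, the sketch below computes each page's click depth, meaning the minimum number of links a crawler must follow from the homepage to reach it, using a breadth-first search over an internal link map. The link map and the cutoff of three clicks are hypothetical; in practice the map would come from a crawl of your own site.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage; returns the minimum clicks per page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link map: page -> pages it links to.
SITE_LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/blog/2023"],
    "/blog/2023": ["/blog/2023/archive"],
    "/blog/2023/archive": ["/blog/2023/archive/old-guide"],
}

depths = click_depths(SITE_LINKS)
deep_pages = sorted(page for page, depth in depths.items() if depth > 3)
print(deep_pages)  # ['/blog/2023/archive/old-guide']
```

Pages that appear in `deep_pages` are candidates for an extra internal link from a hub page or the main navigation. Pages missing from `depths` entirely are not reachable from the homepage at all.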

Optimizing crawl budget is a key factor in SEO. By fixing crawl errors, blocking low-value pages, prioritizing important content, and monitoring crawl stats, you ensure that search engines spend their crawl budget effectively. To address this complex issue, AI tools can help you better understand and resolve crawl budget challenges, ensuring that your site remains visible and properly indexed.