What Is Docker?

Docker is a transformative platform that simplifies application development, deployment, and scaling by using containers. Containers are lightweight, portable units that package software with all its dependencies, so an application behaves consistently across environments. In today’s fast-paced tech landscape, Docker is a critical tool for developers seeking efficiency, scalability, and reliability in managing modern applications.

In this section, we’ll cover the following topics:

- Introduction to Docker

- Docker Desktop: Bridging Local Development and Deployment

- Docker Engine: The Heart of Container Management

- Docker Hub: Connecting the Container Ecosystem

Introduction to Docker

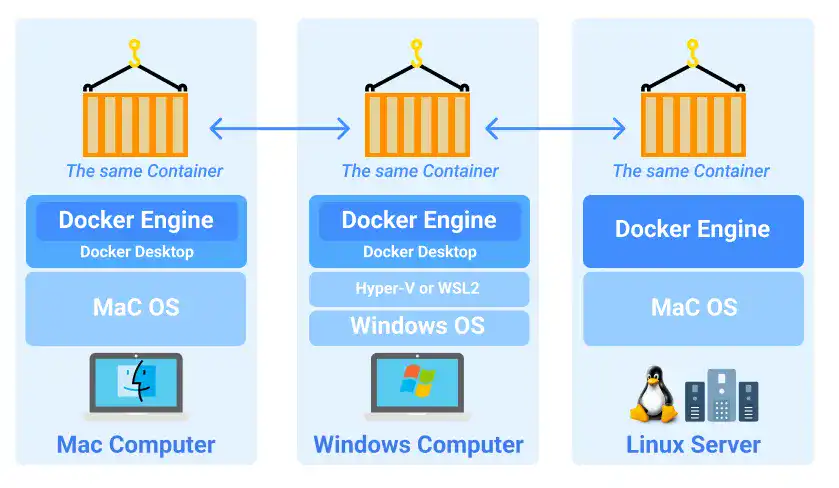

Docker is a platform designed to simplify containerization—a method of running applications in isolated environments. By creating lightweight, self-contained containers, Docker ensures software behaves the same regardless of where it is run, from a developer's laptop to a production server.

The Rise of Containerization

Containerization has become essential for modern software development. Unlike traditional deployment methods that require configuring environments repeatedly, containers provide prepackaged environments that are portable and consistent across different systems. This eliminates the "it works on my machine" problem and improves deployment efficiency.

Docker’s rise is tightly linked to the increasing adoption of microservices architectures, cloud environments, and the need for streamlined DevOps workflows. With containerization, applications can be developed, tested, and deployed in a predictable manner, making them more scalable and manageable.

Key Benefits of Docker in Modern Development

Docker offers numerous advantages, including:

- Portability: Applications bundled in containers run consistently, regardless of the underlying infrastructure.

- Scalability: Docker makes it simple to scale applications horizontally by deploying multiple container instances.

- Efficiency: Containers share the host operating system's kernel instead of each running a full guest operating system, so they use fewer system resources than traditional virtual machines.

- Collaboration: Developers and operations teams can share containerized applications easily through Docker Hub, streamlining workflows.

With a foundational understanding of Docker’s benefits, it's essential to explore its three key components that make container management efficient and scalable:

- Docker Desktop – A user-friendly tool that allows developers to build, test, and manage containers locally before moving to production.

- Docker Engine – The core runtime that handles container execution, networking, and automation through a client-server architecture.

- Docker Hub – A centralized repository that enables users to store, share, and distribute container images for seamless collaboration.

Together, these three components enable developers to streamline application development, ensure consistency, and accelerate deployment workflows. Let’s dive deeper into each of these components, starting with Docker Desktop.

Docker Desktop: Bridging Local Development and Deployment

Docker Desktop is a comprehensive solution designed for developers working on macOS, Windows, or Linux. It provides a seamless experience for building, testing, and managing containerized applications in a local development environment.

Key Features of Docker Desktop:

- Docker Engine Integration: Comes with Docker Engine, allowing developers to run containers without needing a separate installation.

- Graphical User Interface (GUI): Provides an easy-to-use interface for managing containers, images, and networks visually.

- Kubernetes Support: Includes built-in Kubernetes for developers who want to experiment with container orchestration.

- File Sharing and Volume Management: Enables integration between local file systems and running containers, simplifying data persistence.

Docker Desktop eliminates the complexity of setting up container environments manually. With its GUI and command-line support, developers can effortlessly build, test, and debug applications in containers. The ability to mirror production environments locally ensures fewer surprises when deploying to cloud or on-premise servers.
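
To make the file sharing feature more concrete, here is a minimal Python sketch that builds a `docker run` command with a bind mount, which maps a host directory into a container. The helper name `bind_mount_run_command`, the `/work` mount point, and the `alpine` image are illustrative assumptions, not something the article prescribes. The script only prints the command and does not execute it.

```python
from pathlib import Path


def bind_mount_run_command(host_dir, container_dir, image, command):
    """Build the argv for `docker run` with a bind mount (host_dir -> container_dir)."""
    host_path = Path(host_dir).resolve()  # use an absolute host path for the mount
    return [
        "docker", "run", "--rm",               # --rm removes the container on exit
        "-v", f"{host_path}:{container_dir}",  # host path : path inside the container
        image, *command,
    ]


if __name__ == "__main__":
    # Hypothetical example: list the current directory from inside an Alpine container.
    argv = bind_mount_run_command(".", "/work", "alpine", ["ls", "/work"])
    print(" ".join(argv))
```

On a machine with Docker installed, the printed command can be pasted into a terminal; files created under `/work` inside the container would then appear in the host directory.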

Docker Engine: The Heart of Container Management

At the core of Docker’s functionality is Docker Engine. It is responsible for managing containers through a client-server architecture. Whether installed as part of Docker Desktop or independently on a server, Docker Engine ensures consistent management of containers across systems. Its lightweight design and support for APIs make it a powerful tool for automating containerized workflows.

How Docker Engine Works: Client-Server Model

Docker Engine operates through a client-server architecture: users issue commands from a client, and the server, which is the dockerd daemon, processes and executes them. This design allows the same commands to manage containers on a local machine or on remote infrastructure.

When a user runs a Docker command, the client sends a request to the daemon through the Docker Engine API, and the daemon carries it out. Because the client and the daemon are separate processes, users can control containers on different machines, automate deployments, and integrate Docker with other tools.
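
The client-server exchange can be sketched with nothing but the Python standard library. The example below assumes a Unix-like system where the daemon listens on the socket `/var/run/docker.sock` (a common default, but your installation may use a different path) and where the current user is allowed to open it. The class `UnixSocketHTTPConnection` and the function `engine_get` are hypothetical helpers written for this illustration.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # "localhost" is only used to fill in the HTTP Host header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the daemon and return the decoded JSON body."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"daemon returned HTTP {response.status}")
        return json.loads(body)
    finally:
        conn.close()


if __name__ == "__main__":
    try:
        info = engine_get("/version")
        print("Engine version:", info.get("Version"))
        print("API version:", info.get("ApiVersion"))
    except (OSError, RuntimeError, http.client.HTTPException) as exc:
        # No daemon, no socket, or no permission: report instead of crashing.
        print("Could not reach the Docker daemon:", exc)
```

The script plays the role of the client here: it sends one HTTP request, and the daemon does the work and replies with JSON.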

1. The dockerd Daemon – The Heart of Docker Engine

The dockerd daemon is the background process that powers Docker Engine, responsible for executing container-related tasks. It continuously listens for API requests and orchestrates key operations, including:

- Managing the container lifecycle (creating, running, stopping, and deleting containers).

- Handling images, networks, and storage, ensuring smooth communication between containers.

- Tracking the resources containers use and applying configured limits, such as CPU and memory caps, to prevent overuse.

Without the dockerd daemon, Docker Engine cannot function, as it serves as the central component executing all Docker operations.
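
As a rough illustration of the lifecycle operations listed above, the following sketch maps create, run, stop, and delete to the Docker Engine API requests that the daemon listens for. It only builds and prints the requests; nothing is sent. The function name `lifecycle_requests`, the container name `web`, and the `nginx` image are assumptions chosen for the example.

```python
from urllib.parse import quote


def lifecycle_requests(name, image):
    """Return the (method, path, json_body) calls for one container's lifecycle."""
    safe = quote(name, safe="")  # escape the name for use in a URL
    return [
        ("POST", f"/containers/create?name={safe}", {"Image": image}),  # create
        ("POST", f"/containers/{safe}/start", None),                    # run
        ("POST", f"/containers/{safe}/stop", None),                     # stop
        ("DELETE", f"/containers/{safe}", None),                        # delete
    ]


if __name__ == "__main__":
    for method, path, body in lifecycle_requests("web", "nginx"):
        print(method, path, body or "")
```

Each tuple corresponds to one request a client would send; the daemon performs the actual work of creating, starting, stopping, and removing the container.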

2. API Interfaces – Automating and Extending Docker

Docker Engine exposes RESTful APIs, enabling developers and system administrators to integrate Docker with external applications and automate workflows. These APIs provide:

- Remote container management without direct CLI access.

- Automation of repetitive tasks, such as scaling containers dynamically based on demand.

- Seamless integration with DevOps tools, CI/CD pipelines, and container orchestration platforms like Kubernetes.

By leveraging Docker’s API interfaces, businesses can build automated and scalable containerized environments, reducing manual effort and improving deployment efficiency.
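
Remote management depends on telling a client where the daemon lives. The Docker CLI reads this address from the `DOCKER_HOST` environment variable, and an automation script could interpret the same format. The sketch below is a simplified parser that handles only the `unix`, `tcp`, and `ssh` schemes; the function name `parse_docker_host` and the sample addresses are assumptions made for illustration.

```python
from urllib.parse import urlparse


def parse_docker_host(value):
    """Split a DOCKER_HOST-style address into (transport, target)."""
    parsed = urlparse(value)
    if parsed.scheme == "unix":
        return ("unix", parsed.path)            # path to a local socket file
    if parsed.scheme in ("tcp", "ssh"):
        return (parsed.scheme, parsed.netloc)   # host[:port] or user@host
    raise ValueError(f"unsupported address: {value!r}")


if __name__ == "__main__":
    # Sample addresses only; the host and user below are made up.
    for address in (
        "unix:///var/run/docker.sock",
        "tcp://10.0.0.5:2376",
        "ssh://deploy@build-server",
    ):
        print(address, "->", parse_docker_host(address))
```

A script that accepts the same address format as the CLI can be pointed at a local or a remote daemon without code changes.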

3. Command Line Interface (CLI) – A User-Friendly Control Tool

The Docker CLI is a command-based interface that allows users to interact with Docker Engine efficiently. With simple commands, developers and system administrators can:

- Create, start, stop, and remove containers quickly.

- Pull, build, and push images to repositories like Docker Hub.

- Manage networking and storage configurations for optimized container performance.

- Monitor system resource usage to ensure containers are running efficiently.

The CLI simplifies complex container operations, making Docker more accessible and easier to use, even for those new to containerization.
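
For readers who want to script the CLI, here is a small Python sketch with one representative command for each task area listed above. The image `nginx:latest`, the name `demo-web`, and the helper names `cli_examples` and `run` are placeholders chosen for this example. The script prints the commands without executing them; calling `run` requires the Docker CLI and a running daemon.

```python
import shutil
import subprocess


def cli_examples(image, name):
    """Return one docker CLI invocation per task area, keyed by a short label."""
    return {
        "containers": ["docker", "run", "--detach", "--name", name, image],
        "images": ["docker", "pull", image],
        "networking": ["docker", "network", "create", f"{name}-net"],
        "storage": ["docker", "volume", "create", f"{name}-data"],
        "monitoring": ["docker", "stats", "--no-stream"],
    }


def run(argv):
    """Run one command and return its exit code; requires the docker CLI on PATH."""
    if shutil.which(argv[0]) is None:
        raise FileNotFoundError(f"{argv[0]} not found on PATH")
    return subprocess.run(argv, check=False).returncode


if __name__ == "__main__":
    # Print only; nothing is executed unless run(...) is called explicitly.
    for label, argv in cli_examples("nginx:latest", "demo-web").items():
        print(f"{label:<11}", " ".join(argv))
```

Passing each command as a list of arguments, rather than as a single shell string, avoids quoting problems when names contain spaces or special characters.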

Docker Engine’s client-server architecture, combined with its daemon, API interfaces, and CLI, makes it a robust and flexible tool for managing containerized applications. By integrating these components, Docker ensures efficiency, automation, and scalability in modern application development and deployment.

Docker Hub: Connecting the Container Ecosystem

Docker Hub is a cloud-based registry that allows users to store, manage, and share container images in repositories. It serves as a critical component in the Docker ecosystem, enabling teams to collaborate and streamline deployments across different environments.

Key Features of Docker Hub:

- Centralized Image Repository: Developers can push and pull images to ensure consistency across deployments.

- Official Docker Images: Provides trusted, pre-configured images for technologies like Python, Node.js, MySQL, and more.

- Public and Private Repositories: Supports both open-source sharing and private storage for enterprise use.

- Automated Builds: Supports Continuous Integration (CI) by automatically building images from source code repositories such as GitHub or Bitbucket (a feature of paid Docker subscriptions).
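
Short image names such as `python` work because Docker fills in defaults: the Docker Hub registry (`docker.io`), the `library` namespace used for official images, and the `latest` tag. The sketch below is a simplified illustration of that expansion, not Docker's actual parser; it ignores digests (`@sha256:...`), and the function name `normalize_image` and the sample names are assumptions.

```python
def normalize_image(ref):
    """Expand a short image name into registry/path:tag using Docker Hub defaults."""
    name, tag = ref, "latest"
    # A tag is whatever follows the last ":" as long as it comes after the last "/".
    last_slash = ref.rfind("/")
    last_colon = ref.rfind(":")
    if last_colon > last_slash:
        name, tag = ref[:last_colon], ref[last_colon + 1:]

    parts = name.split("/")
    first = parts[0]
    # The first component is a registry host if it looks like a hostname.
    has_registry = len(parts) > 1 and ("." in first or ":" in first or first == "localhost")
    if has_registry:
        registry, path = first, "/".join(parts[1:])
    else:
        registry, path = "docker.io", name
        if "/" not in path:
            path = "library/" + path  # official images live under "library"
    return f"{registry}/{path}:{tag}"


if __name__ == "__main__":
    # "myuser/app" is a made-up repository name.
    for ref in ("python", "mysql:8.0", "myuser/app:1.0", "localhost:5000/app"):
        print(ref, "->", normalize_image(ref))
```

Spelling out the tag explicitly, rather than relying on `latest`, helps keep deployments reproducible.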

Docker is an essential tool in modern application development, providing a structured way to containerize applications, manage environments, and streamline deployment workflows. With Docker Desktop for local development, Docker Engine for robust container management, and Docker Hub for image distribution, Docker enables scalability, efficiency, and collaboration across development teams. By leveraging this ecosystem, developers can simplify workflows and accelerate software delivery.

FAQ: Understanding Docker and Its Components

What is Docker?

Docker is a platform that simplifies application development, deployment, and scaling by using containers. Containers package software with all its dependencies, so an application behaves consistently across environments.

Why is containerization important in modern software development?

Containerization provides prepackaged environments that are portable and consistent across different systems, eliminating the "it works on my machine" problem and improving deployment efficiency.

What are the key benefits of using Docker?

Docker offers portability, scalability, efficiency, and collaboration. It allows applications to run consistently across infrastructures, simplifies scaling, reduces resource usage, and facilitates sharing through Docker Hub.

What is Docker Desktop?

Docker Desktop is a tool for developers on macOS, Windows, or Linux, providing a seamless experience for building, testing, and managing containerized applications locally with features like Docker Engine integration and Kubernetes support.

How does Docker Engine manage containers?

Docker Engine uses a client-server architecture, where the dockerd daemon processes commands from the client, managing container lifecycle, images, networks, and storage through APIs and a command-line interface.